OpenVLA is a 7B-parameter open-source vision-language-action (VLA) model pretrained on the Open X-Embodiment dataset. It is representative of generalist robot manipulation policies that can generalize to open-ended tabletop scenarios.

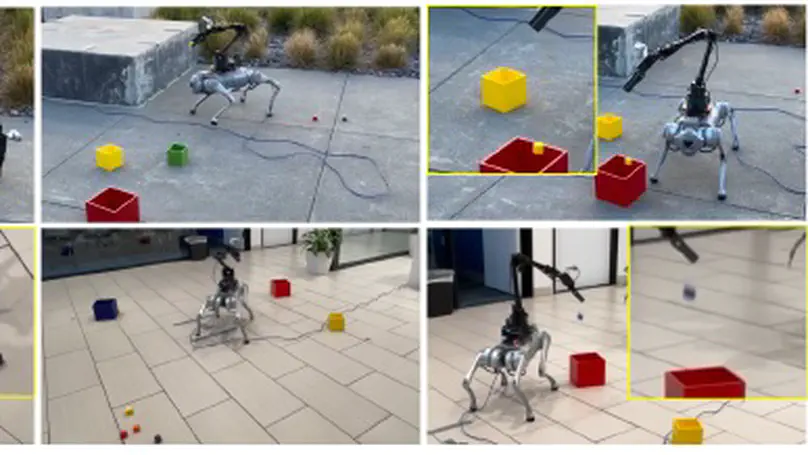

What is SLIM? SLIM is a low-cost legged mobile manipulation system that solves long-horizon real-world tasks and is trained with reinforcement learning purely in simulation. Some deployment demos of SLIM are shown below:

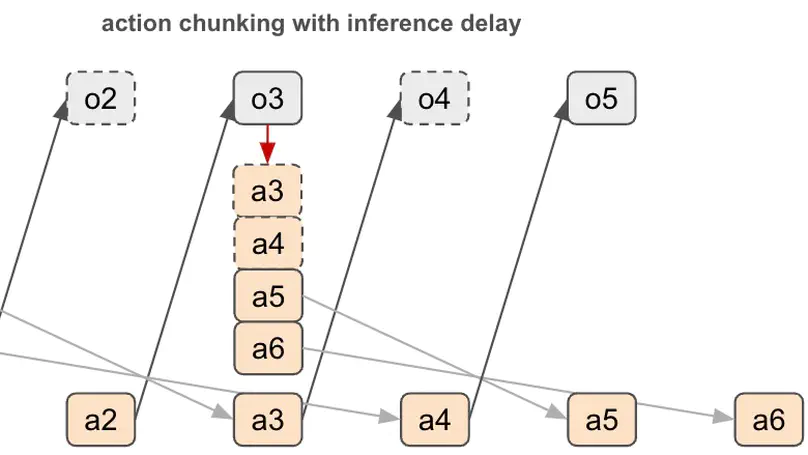

Action chunking is a form of open-loop control in which, at every control time step, a policy outputs a chunk (sequence) of future actions given the current observation. Usually the action sequence is fully or partially executed before the next control time step.
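A minimal sketch of such a control loop follows. The `policy(obs)` and `env_step(action)` interfaces, the toy 1-D environment, and the `execute_len` parameter are all hypothetical, chosen for illustration rather than taken from any particular system. The policy is queried only when the queued chunk runs out, and `execute_len` selects full or partial execution of each chunk.

```python
from collections import deque


def run_with_action_chunking(policy, env_step, obs, num_steps, execute_len):
    """Action-chunking control loop (hypothetical interfaces).

    policy(obs)      -> list of future actions (one chunk)
    env_step(action) -> next observation
    execute_len      -> how many actions of each chunk to run open-loop
                        before querying the policy again
    """
    if execute_len < 1:
        raise ValueError("execute_len must be at least 1")
    queue = deque()
    for _ in range(num_steps):
        if not queue:
            # Query the policy only when the queued chunk is used up.
            chunk = policy(obs)
            queue.extend(chunk[:execute_len])
        # Observations arriving mid-chunk are not fed back to the policy.
        obs = env_step(queue.popleft())
    return obs


class ToyEnv:
    """1-D integer position; each action adds to the position."""
    def __init__(self):
        self.pos = 0

    def step(self, action):
        self.pos += action
        return self.pos


class ChunkPolicy:
    """Plans chunk_size unit steps toward a target and counts its queries."""
    def __init__(self, chunk_size, target):
        self.chunk_size, self.target, self.calls = chunk_size, target, 0

    def __call__(self, obs):
        self.calls += 1
        chunk, p = [], obs
        for _ in range(self.chunk_size):
            a = (self.target > p) - (self.target < p)  # sign of remaining error
            chunk.append(a)
            p += a  # predicted position after this action
        return chunk


full = ChunkPolicy(chunk_size=4, target=5)
final_full = run_with_action_chunking(full, ToyEnv().step, 0, num_steps=8, execute_len=4)
closed = ChunkPolicy(chunk_size=4, target=5)
final_closed = run_with_action_chunking(closed, ToyEnv().step, 0, num_steps=8, execute_len=1)
print(final_full, full.calls)      # 5 2
print(final_closed, closed.calls)  # 5 8
```

In this deterministic toy both settings reach the target, but executing full chunks needs 2 policy queries instead of 8. The trade-off is that disturbances occurring mid-chunk go unnoticed until the next query.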

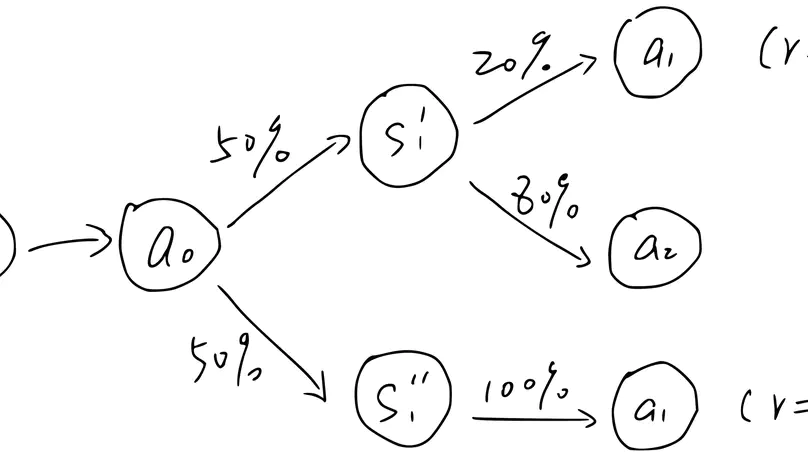

A current trend in combining LLMs (or LVMs) with robotics is to first use an LLM to decompose a high-level task into several subtasks, given the instruction and an image of the scene. For example, given an instruction “Put all toys into the basket.
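The decompose-then-execute pattern can be sketched as below. This is a hypothetical illustration: `decompose` is a hard-coded stand-in for the LLM/VLM planner, the scene is given as a list of object labels instead of an image, and the subtask wording is invented. A real system would prompt a model and hand each subtask to a low-level policy.

```python
from typing import Callable, List


def decompose(instruction: str, scene_objects: List[str]) -> List[str]:
    """Stand-in for an LLM/VLM planner (hypothetical). A real system would
    prompt the model with the instruction and scene image; here a fixed
    rule is used so the sketch runs offline."""
    if instruction.lower().startswith("put all toys into the basket"):
        toys = [o for o in scene_objects if o.startswith("toy")]
        return [f"pick up the {t} and place it in the basket" for t in toys]
    return [instruction]  # fall back to treating the task as atomic


def run_task(instruction: str, scene_objects: List[str],
             execute_subtask: Callable[[str], bool]) -> List[str]:
    """Execute subtasks in order with a low-level skill; stop on failure."""
    done = []
    for sub in decompose(instruction, scene_objects):
        if not execute_subtask(sub):
            break
        done.append(sub)
    return done


scene = ["toy car", "toy duck", "basket", "cup"]
log = run_task("Put all toys into the basket.", scene, lambda s: True)
for step in log:
    print(step)
```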

Vision Sim2Real Gap: Two types of robot sensors. When diving into the world of robotics, it is crucial to understand the fundamental role sensors play in guiding a robot’s actions. Broadly speaking, sensors fall into two main categories: proprioceptive and exteroceptive.

Physics Sim2Real Gap (continued): System identification errors; Controller in the loop of policy control. A robot typically consists of multiple actuators, such as gear motors, each tasked with moving one or more joints.
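One common arrangement for a controller in the loop, assumed here because the excerpt does not name a specific controller, is a PD position controller: the policy outputs target joint positions at a low rate, and the PD loop converts them into torques at a higher rate. The single-joint model, gains, rates, and torque limit below are illustrative values, not measurements of any real actuator.

```python
def pd_torque(q_target, q, qd, kp, kd, tau_max):
    """PD position control clamped to an (assumed) actuator torque limit."""
    tau = kp * (q_target - q) - kd * qd
    return max(-tau_max, min(tau_max, tau))


def simulate(policy, q0=0.0, duration=1.0, policy_hz=50, control_hz=1000,
             kp=100.0, kd=20.0, tau_max=50.0, inertia=1.0):
    """Single joint: the policy sets a target position at policy_hz and the
    PD loop turns it into torque at control_hz."""
    dt = 1.0 / control_hz
    decimation = control_hz // policy_hz  # PD steps per policy step
    q, qd, q_target = q0, 0.0, q0
    for step in range(int(duration * control_hz)):
        if step % decimation == 0:
            q_target = policy(q, qd)  # low-rate policy query
        tau = pd_torque(q_target, q, qd, kp, kd, tau_max)
        qd += (tau / inertia) * dt  # semi-implicit Euler integration
        q += qd * dt
    return q, qd


q_final, qd_final = simulate(lambda q, qd: 1.0)  # policy: hold the joint at 1 rad
print(f"q = {q_final:.3f} rad, qd = {qd_final:.3f} rad/s")
```

Because the policy only sees the joint through this loop, any mismatch between the simulated and real `kp`, `kd`, `tau_max`, or `inertia` changes the motion produced by the same policy output. This is one way system identification errors show up after deployment.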

Why Scaling Hasn’t Happened in the World of Robotics. As we navigate the landscape of general-purpose robots today, one of the most pressing challenges we face is the lack of data. This scarcity of data, particularly robot action data, is a major roadblock to the widespread adoption of deep learning models in real-world robotic applications.

The output of large language models can be divided into two categories. The first is metaphysical, philosophical thought that cannot be confirmed or falsified by current scientific methods, such as the meaning of life, the appearance of extraterrestrial beings, the existence of the human soul, and so on.

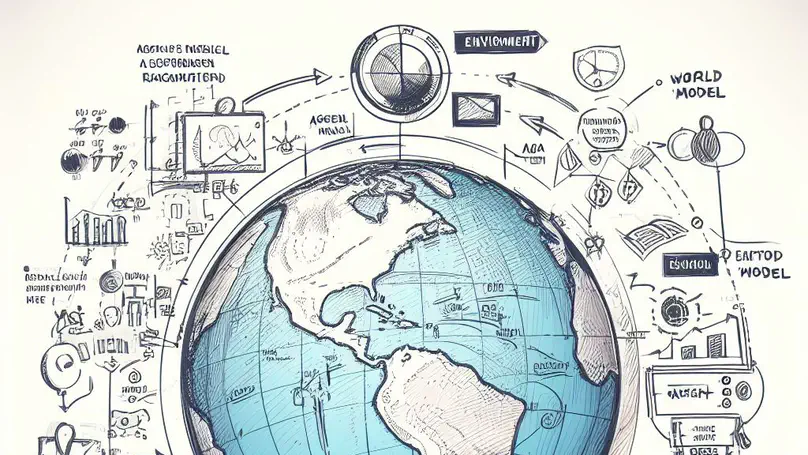

The short answer is that they both learn and fail to learn world models. The title of this article encompasses two concepts: “large language models” and “world models.” In the current wave of AI, the concept of large language models is likely well understood.

Quite a few people have asked me what “language grounding” means, so I’ll write a short post explaining it and, specifically, arguing why it is important for a truly intelligent agent.

Recently, I was working on a project that required learning a latent representation with disentangled factors for high-dimensional inputs. As a brief introduction to disentanglement: although we could use an autoencoder (AE) to compress a high-dimensional input into a compact embedding, there is always dependence among the embedding dimensions, meaning that multiple dimensions change together in a dependent way.
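A crude way to observe this dependence is to measure the pairwise correlation between embedding dimensions over a batch of codes. The sketch below uses synthetic 3-D codes as a stand-in for AE embeddings (an assumption; no trained model is involved). Correlation only detects linear dependence, whereas disentanglement is usually framed in terms of statistical independence, so a near-zero correlation does not by itself prove that two dimensions are independent.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


def correlation_matrix(codes):
    """Pairwise correlation between embedding dimensions.
    codes: list of embedding vectors, one per input sample."""
    dims = list(zip(*codes))  # transpose: one tuple of values per dimension
    return [[pearson(a, b) for b in dims] for a in dims]


# Synthetic codes: dim 1 is almost a linear function of dim 0 (entangled),
# while dim 2 is sampled independently.
rng = random.Random(0)
codes = []
for _ in range(1000):
    z0 = rng.gauss(0, 1)
    z1 = 2.0 * z0 + rng.gauss(0, 0.1)
    z2 = rng.gauss(0, 1)
    codes.append((z0, z1, z2))

corr = correlation_matrix(codes)
for row in corr:
    print(" ".join(f"{c:+.2f}" for c in row))
```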

The other day I was reading the article “You and Your Research,” transcribed from a seminar by Richard Hamming. There is one paragraph about “choosing important problems” that I find inspiring: